Level Set: Before we begin, it’s important that you understand a few things about Facebook…

First and foremost—Facebook doesn’t exist to make your life better or even to give you a good user experience. It exists to make money for Meta. It does this by learning as much as it can about you, and then selling access to you (via the platform) to other entities that want to reach someone with your perceived attributes.

These entities are usually businesses that want to sell you products or services, but sometimes they are organizations that want to sell you their ideology.

The platform is designed to encourage interaction to keep you on it longer in order to learn about you and have more opportunities to sell things to you. This means that it has no qualms about intentionally manipulating your emotions if getting you angry will keep you on the platform longer. Just like Philip Morris, it wants you to become addicted to its product.

Successful FB accounts often create fake content to get people to engage. This can range from heartwarming stories that simply aren’t true, to posts that make ridiculous claims like, “Only a genius can name two cities that start with an A” (Spoiler alert: you’re not a genius for coming up with Annapolis and Anchorage), to outrageous “AITA” scenarios. They will also occasionally post things that everyone knows are false or incorrect, merely to get people to correct them (thereby engaging with their content and pushing it out to more people).

AI is also used to create fake stories and fake photos that drive engagement. Don’t comment on them and they will eventually disappear. Only tactics that people “fall for” will continue to get engagement, so if we all only engaged with legitimate content from people we know first-hand, a lot of this BS would just fade away. But as Stormy & KitKat know, that’s easier said than done. (Actually, KitKat is one of the smart ones that never adopted FB.)

NOT THE BOUNDARY WATERS

There are also fake accounts (bots) and professional trolls whose “job” is to drive engagement by provoking other commenters (sometimes by being very nasty). And there are very real trolls (maybe even friends and neighbors) who find themselves so provoked by this content that they lash out in ways they wouldn’t in real life.

Here’s an example of that last point: While I was doing some research, I came across a 2018 article informing people that FB had categorized them by political view. If you follow the instructions in that article today, you’ll see that this categorization has disappeared, no doubt due to pressure over social media algorithms skewing information during the 2020 election. (I’ve included a ChatGPT conversation at the end of this article if you’re interested in learning more. It basically confirms what I already suspected: the label is no longer visible, but we’re all still being categorized politically.)

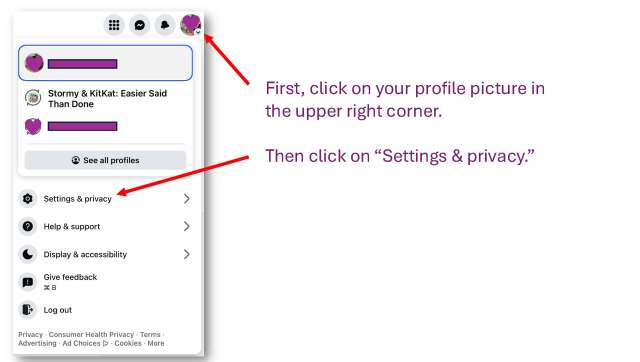

Following are step-by-step instructions for gaining a little more control over what’s in your feed.

Note: These instructions are for those using Facebook on a desktop computer (it’s much easier to work on a larger screen). If you don’t have a desktop, I’m sure you can do these same maneuvers on a tablet or phone, but the experience is likely different, so you’ll need to experiment.

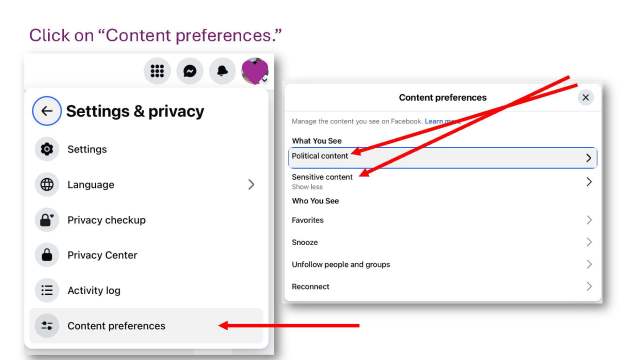

Clicking on “Content preferences” will bring you to the screen below. Here you can click on “Political content” and toggle it OFF if you don’t want to see so many political posts in your feed. (YAY!)

Likewise, you can toggle “Sensitive content” off if you don’t want other disturbing content in your feed. Note: If you DO want to see political posts from certain trusted sources, just include those in your Favorites, which you will learn about next.

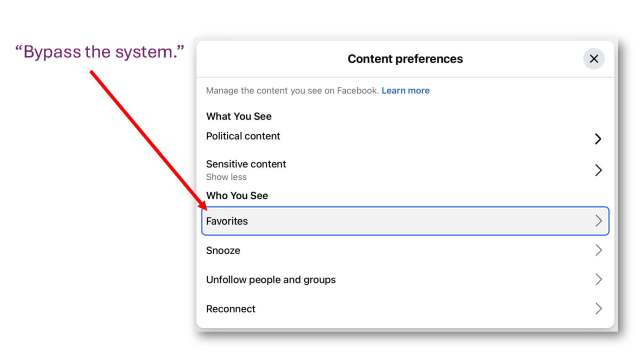

Bypass the System… for real

Remember the viral FB hoax about how you could “Bypass the system” by copying and pasting some verbiage into your post in order to stop seeing the same 25 people in your feed? (Note: Anything instructing you to copy and paste text in order to change your rights or your privacy on FB is completely bogus. That’s not how the platform works.)

Well, the post was right about one thing: There is an algorithm at play that is controlling whose posts you see most regularly, but the magic number is actually 30. You can find out who these 30 are by clicking on “Favorites.”

You’ll notice that these are the same people or pages that pop up in your feed most frequently, and that they change slowly over time. Here’s why: by default, Facebook “chooses” them based on how frequently you interact with their posts (likes, comments, etc.) and, this is important, how recently you became FB friends. (This is because FB is trying to “cement” your new relationship. Isn’t that thoughtful? 🙄)

If you’ve been on FB a while, you likely have old friends and family members that you added years ago. Unfortunately, if you’re hoping to keep up with what’s going on in their lives via social media, FB is going to instead show you posts by the woman in your yoga class whose friend request you accepted last week.

Here, under “Favorites,” you can legitimately bypass the system by designating your own favorites.

I’m not sure how long FB will honor your choices, but this should work at least temporarily. However, because I don’t trust FB (and neither should you), I would assume that as you add new friends or interact with new pages, they will incrementally be added to your Favorites and some of your previously designated Favorites may fall off.

And now, let’s tackle those pesky ads…

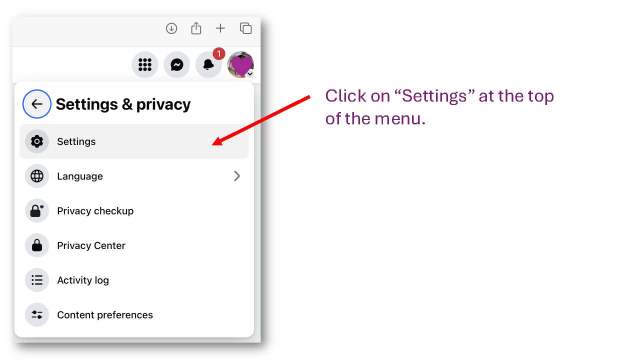

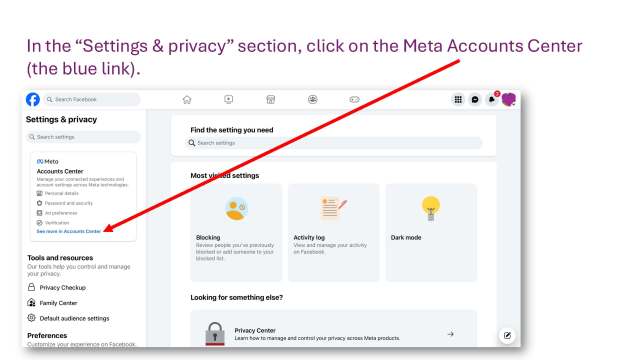

Okay, now you should still be in the “Settings & privacy” section, but instead of clicking on “Content preferences,” we’re now going to click on “Settings” at the top of the menu.

This brings us to a more extensive “Settings & privacy” section. Note the “Meta Accounts Center” box at the left. Click on the blue link.

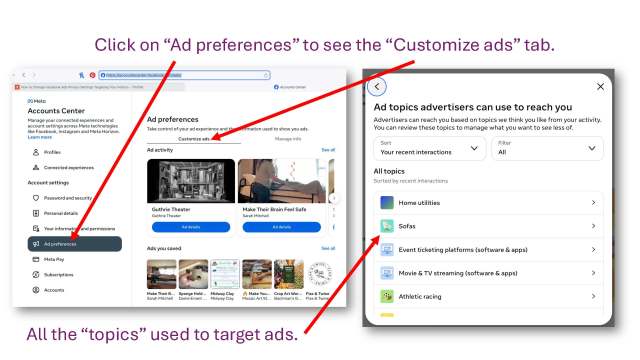

Here is where it gets interesting. Click on “Ad preferences” and look at the tab labeled “Customize ads.” Scroll down on this page and you’ll see what FB has designated as your preferences based on past interactions with ads. There is also a list of “Ad topics,” and you can individually select whether or not you want to see “less of” products related to each category.

Note that you have to select them one at a time and it’s a long list. You also can’t opt to not see them at all…just less. (Two more reasons I hate FB.)

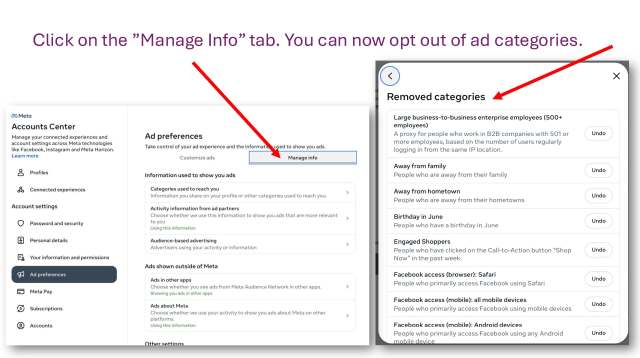

Now we’re going to click on the “Manage Info” tab. This will bring up a long list of all the categories used to target ads to you. You can opt out of these categories (again, you have to do it one by one).

Below are all the categories I removed myself from!

It’s also worth noting that FB gets a lot wrong in its assumptions. For example, it had me in categories related to “living away from my family” and “away from my hometown.” Both Oskar and I are originally from the Twin Cities area, and neither of us has ever lived anywhere else!

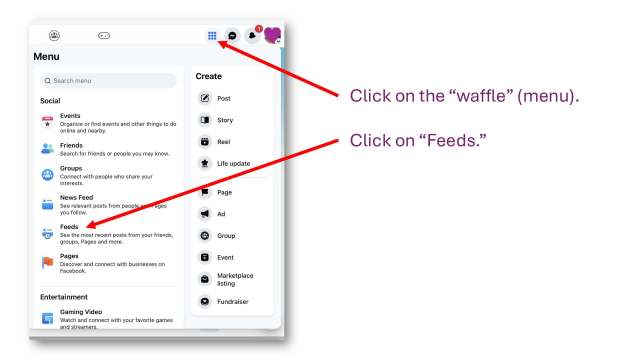

Lastly, you can click on the “waffle” (menu), which will bring up this screen.

If you then click on “Feeds,” you can see the one marked “Friends.” This is where you’ll see ALL of your friends’ posts (beyond just your “Favorites”) without all the random posts from groups or pages that FB thinks you might be interested in.

However, you’ll notice there are still ads/sponsored posts mixed in among them. (Sigh.)

What now?

If you’ve followed these instructions, you should now have a little more control over your feed, although you probably realize that this control is limited and possibly temporary. The best advice I can give you is to revisit these pages occasionally. See what’s new and what has changed. But even better advice is to retain some healthy skepticism when using FB and limit your use.

A deeper dive – A conversation with ChatGPT about Facebook targeting

Prompt: A 2018 Thrillist article (https://www.thrillist.com/tech/nation/facebook-ads-classify-users-political-views-how-to-change) cites Facebook classifying users but the designation mentioned in the article no longer exists. When did Facebook change it and why?

ChatGPT Response: Here’s what happened with the “you’re labeled as liberal/conservative” political-view classification Facebook used to show in your Ad Preferences (the setting Thrillist described in 2018), and when and why it changed:

📉 What the Thrillist Article Was Describing (2018)

In 2018 Facebook did infer users’ political leanings (e.g., “Very Liberal,” “Moderate,” or “Conservative”) and put those in a user’s Ad Preferences page based on activity and inferred interests. That let advertisers see and (in some cases) target users using related interest categories.

That inference category was part of a broader set of “Detailed Targeting” attributes Facebook assigned to user profiles based on their behavior, likes, page interactions, and inferred interests.

🗓 When Did Facebook Remove That Type of Classification?

Facebook (now Meta) began removing the ability for advertisers to target users based on inferred political views and other sensitive categories in late 2021 / early 2022.

Key points:

- In November 2021, Meta announced it would eliminate ad targeting options related to “sensitive” characteristics, including political affiliation, religion, sexual orientation, race, and health issues.

- These changes went into effect on January 19, 2022. Targeting options tied to interests like political ideology and beliefs were removed from the Detailed Targeting menu in Meta Ads Manager.

- Ads already running with those targeting options continued for a transitional period, but after March 17, 2022, Meta fully stopped serving ads using those removed categories.

This means by early 2022, the political-leaning interest categories that Thrillist showed users how to find were no longer available for advertisers, and related profile labels were no longer displayed in the same way.

🤔 Why Did Facebook Remove It?

Meta said the change was intended to reduce the misuse and abuse of ad-targeting features — especially categories that could be perceived as sensitive, discriminatory, or open to manipulation. Advertisers could discriminate or spam users by targeting too precisely on topics like politics or religion.

The company responded to input from:

- Civil-rights groups

- Policymakers concerned about discrimination

- Media reports (such as ProPublica’s) showing misuse of targeting tools

Meta’s official reasoning was that removing these targeting options would protect users and avoid the negative externalities associated with overly granular targeting based on personal attributes.

📌 What This Change Means Today

- Users no longer see political-view labels (like “conservative” or “liberal”) in Ad Preferences the way the 2018 article described.

- Advertisers can still run political ads (in most regions), but they can’t target audiences based on inferred political ideology or other “sensitive” categories using those old interest flags.

- Meta has instead shifted toward broader targeting and algorithmic delivery, relying more on machine learning signals rather than explicit detailed categories. (This shift is ongoing, and many advertisers notice that “interest” categories have faded in importance or been consolidated.)

Summary:

Facebook’s ability to classify users by a political-view label that advertisers could see was effectively phased out starting in late 2021, with the changes fully implemented around early 2022, because Meta removed “sensitive” Detailed Targeting options (including political affiliation) after concerns about abuse and discrimination.

If you’d like, I can also explain how Facebook/Meta still infers user traits today (even without explicit targeting options) and what that means for ad delivery and personalization.

Stormy’s Prompt: Yes, please do.

ChatGPT Response: Great, this is where it gets more nuanced.

Even though Meta removed explicit “sensitive” targeting categories (like political affiliation) from advertiser menus in early 2022, that does not mean Meta stopped inferring user traits. It changed how those inferences are used and exposed.

Here’s how it works now:

1️⃣ Explicit Labels vs. Machine Learning Prediction

Before 2022:

- Advertisers could select categories like “Very Liberal” or “Conservative” in Detailed Targeting.

- Those were explicit interest buckets visible in Ads Manager.

After 2022:

- Those explicit categories were removed.

- But Meta still uses machine learning models to predict user behavior and engagement patterns — including political engagement tendencies — for ad delivery optimization.

In other words:

❌ Advertisers can’t select “Conservative voters.”

✅ Meta’s algorithm can still learn who is likely to engage with a given political message.

The classification hasn’t disappeared; it’s just moved from a manual targeting tool to a black-box optimization system.

2️⃣ How Meta Infers Traits Today

Meta builds predictive models using signals such as:

- Pages you follow

- Posts you engage with

- Videos you watch (including how long)

- Groups you join

- Ads you click

- Off-platform browsing data (via Meta Pixel / Conversions API)

- Demographic and geographic data

From those signals, the system predicts things like:

- Likelihood to click political content

- Likelihood to donate

- Issue interest (climate, immigration, gun rights, etc.)

- Likelihood to share partisan content

Even if “political affiliation” isn’t a selectable targeting category, the delivery system can still optimize ads toward users who historically respond to certain political themes.

3️⃣ The Shift: From “Targeting” to “Optimization”

Meta has publicly framed this as a move away from “sensitive targeting.”

In practice, what changed is:

Old model (pre-2022):

- Advertiser manually picks interest categories.

- Targeting is transparent and discrete.

New model (2022–present):

- Advertiser chooses broad audiences.

- Meta’s AI decides who within that audience sees the ad based on predicted response.

This is called algorithmic delivery optimization.

So while a campaign can’t say “show this to conservatives,” it can:

- Upload a donor list (Custom Audience)

- Ask for a “Lookalike Audience”

- Optimize for “engagement” or “donations”

The system then finds people who behave similarly — which can strongly correlate with political identity.
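To make the Lookalike idea more concrete, here is a toy Python sketch. It is not how Meta actually builds Lookalike Audiences (that system isn’t public). It assumes, purely for illustration, that each user is boiled down to four made-up behavior scores between 0 and 1, averages the uploaded donor list into one “typical donor” profile, and ranks everyone else by cosine similarity to that profile. The feature names, users, and scores are all hypothetical. Notice that none of the features is a political label, yet the people it picks are simply the ones who behave most like the donors.

```python
import math

# Hypothetical behavior scores (0 to 1) per user. These feature names are
# invented for illustration; none of them is an explicit political label:
# [follows_issue_pages, watches_news_video, joins_local_groups, clicks_fundraiser_ads]
SEED_DONORS = {
    "donor_a": [0.9, 0.8, 0.1, 0.7],
    "donor_b": [0.8, 0.9, 0.2, 0.9],
}

# Hypothetical users the advertiser has never heard of.
CANDIDATES = {
    "user_1": [0.9, 0.7, 0.1, 0.8],
    "user_2": [0.1, 0.2, 0.9, 0.1],
    "user_3": [0.7, 0.9, 0.3, 0.6],
    "user_4": [0.2, 0.1, 0.8, 0.3],
}


def centroid(vectors):
    """Average the seed users' scores into one 'typical member' profile."""
    vectors = list(vectors)
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def cosine(a, b):
    """Cosine similarity: 1.0 means same direction; 0.0 is returned for a zero vector."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def lookalike(seed, candidates, k):
    """Return the k candidate names whose behavior most resembles the seed list."""
    profile = centroid(seed.values())
    ranked = sorted(
        candidates,
        key=lambda name: cosine(candidates[name], profile),
        reverse=True,
    )
    return ranked[:k]


if __name__ == "__main__":
    print(lookalike(SEED_DONORS, CANDIDATES, k=2))
```

Running it prints `['user_1', 'user_3']`: the two users whose habits resemble the donors. If the donor list happens to lean one way politically, the “lookalikes” will likely lean the same way, even though politics never appears in the data.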

4️⃣ What This Means for Political Advertising

For political advertisers specifically:

- Meta still allows political ads in the U.S.

- Advertisers must go through identity verification.

- Ads are stored in the public Ad Library.

- Detailed targeting based on “sensitive” interests is prohibited.

However:

- Broad targeting + optimization often recreates segmentation patterns.

- Researchers have found algorithmic delivery can produce partisan sorting even without explicit targeting inputs.

That’s a key distinction regulators are still wrestling with.

5️⃣ Are Political “Scores” Still Assigned Internally?

Meta has not publicly confirmed maintaining explicit ideological labels like “Very Liberal” in the same way users once saw in Ad Preferences.

But:

- The system must internally model engagement likelihood.

- That modeling necessarily involves behavior patterns that correlate strongly with political ideology.

- In machine learning terms, ideology may function as a latent variable — even if it’s no longer a visible category.

So while the label disappeared, predictive inference likely did not.

6️⃣ Why Meta Made This Shift

There were three main pressures:

- Civil rights concerns (discrimination via targeting)

- Political backlash after the 2016 and 2020 elections

- Regulatory scrutiny (U.S. and EU)

By removing explicit “sensitive” targeting options, Meta could argue it reduced the risk of discriminatory ad targeting — while preserving performance-based ad delivery.

7️⃣ The Big Structural Reality

This is the core tension:

Removing explicit categories does not eliminate predictive inference in AI-driven advertising systems.

Modern ad platforms don’t need labeled buckets — they predict behavior directly.